Chatbots like Google's LaMDA or OpenAI's ChatGPT are neither sentient nor particularly intelligent. Nonetheless, boffins believe they can combine these large language models with some extra AI code to simulate human behavior, in a setup inspired by one of the world's most popular computer games.

The latest effort along these lines comes from six computer scientists – five from Stanford University and one from Google Research – Joon Sung Park, Joseph O'Brien, Carrie Cai, Meredith Ringel Morris, Percy Liang, and Michael Bernstein. The project looks a lot like an homage to the classic Maxis game The Sims, which debuted in 2000 and lives on at EA in various sequels.

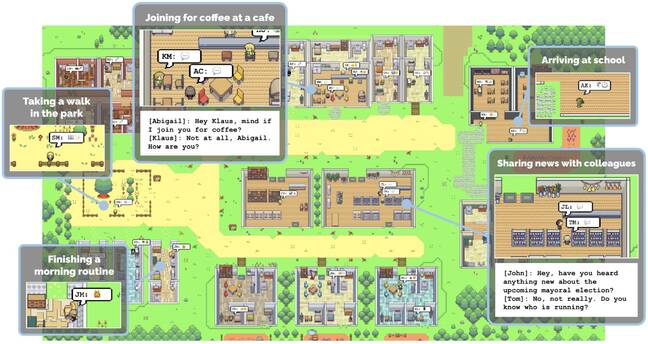

Screenshot from Park et al.'s paper on their ChatGPT-powered software, illustrating what each agent in the simulation is getting up to and what the agents are saying to one another

As described in their recent preprint paper, "Generative Agents: Interactive Simulacra of Human Behavior," the researchers developed a software architecture that "stores, synthesizes, and applies relevant memories to generate believable behavior using a large language model."

Or more succinctly, they bolted memory, reflection (inference from memories), and planning code onto ChatGPT to create generative agents: simulated personalities that interact and pursue their own goals, communicating via text in an attempt at natural language.

"In this work, we demonstrate generative agents by populating a sandbox environment, reminiscent of The Sims, with twenty-five agents," the researchers explain. "Users can observe and intervene as agents plan their days, share news, form relationships, and coordinate group activities."

To see this for yourself, visit the demo world, which runs on a Heroku instance and is built with the Phaser web game framework. Visitors can interact with a pre-computed replay of a session as these software agents go about their lives.

The demo, centered on an agent named Isabella and her attempt to plan a Valentine's Day party, lets visitors examine the state data of the simulated personalities. That is to say, you can click on them and see their text memories and other information about them.

For example, the generative agent Rajiv Patel had the following memory at 2023-02-13 20:04:40:

> Hailey Johnson is conversing about Rajiv Patel and Hailey Johnson are discussing potential collaborations involving math, poetry and art, attending mayoral election discussion and town hall meetings for creating job opportunities for young people in their community, attending improv classes and mixology events, and Hailey's plan to start a podcast where Rajiv will be a guest sharing more about his guitar playing journey; and they have also discussed potential collaborations with Carmen Ortiz about promoting art in low-income communities to create more job opportunities for young people.

The purpose of this research is to move beyond foundational work like the 1960s Eliza engine, and beyond reinforcement learning efforts like AlphaStar for StarCraft II and OpenAI Five for Dota 2. Those efforts focus on adversarial environments with clear victory goals and a software architecture that lends itself to programmatic agents.

"A diverse set of approaches to creating believable agents emerged over the past four decades. In implementation, however, these approaches often simplified the environment or dimensions of agent behavior to make the effort more manageable," the researchers explain. "However, their success has largely taken place in adversarial games with readily definable rewards that a learning algorithm can optimize for."

Large language models, like ChatGPT, the boffins observe, encode a vast range of human behavior. So given a prompt with a sufficiently narrow context, these models can generate plausible human behavior – which could prove useful for automated interaction that's not limited to a specific set of preprogrammed questions and answers.

But the models need additional scaffolding to create believable simulated personalities. That's where the memory, reflection, and scheduling routines come into play.
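To make the idea of a "sufficiently narrow context" concrete, here is a minimal sketch of how such scaffolding might assemble a prompt from an agent's identity, a few retrieved memories, and the current situation. The `build_prompt` function, its layout, and the sample inputs are hypothetical illustrations, not the researchers' actual prompt template, and no model is called.

```python
from typing import List


def build_prompt(agent_summary: str, memories: List[str],
                 situation: str, question: str) -> str:
    """Assemble a narrowly scoped prompt (hypothetical layout, not the paper's template)."""
    memory_lines = "\n".join(f"- {m}" for m in memories)
    return (
        f"{agent_summary}\n\n"
        f"Relevant memories:\n{memory_lines}\n\n"
        f"Current situation: {situation}\n"
        f"{question}"
    )


prompt = build_prompt(
    agent_summary="Isabella Rodriguez works at Hobbs Cafe.",
    memories=["Isabella Rodriguez is planning a Valentine's Day party at Hobbs Cafe"],
    situation="Maria Lopez walks into the cafe.",
    question="What would Isabella Rodriguez say to Maria Lopez?",
)
print(prompt)
```

The model only ever sees this small slice of the agent's life; the scaffolding's real job is deciding which memories make it into the slice.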

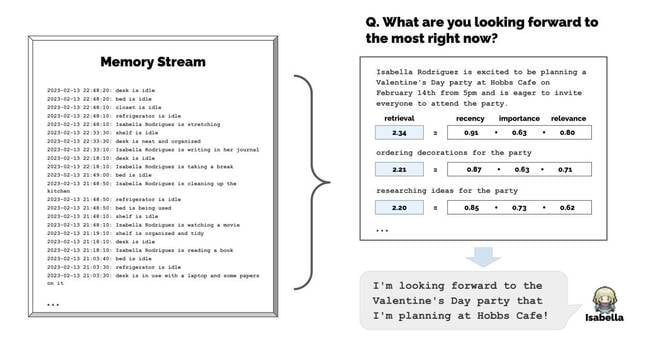

"Agents perceive their environment, and all perceptions are saved in a comprehensive record of the agent’s experiences called the memory stream," the researchers state in their paper.

"Based on their perceptions, the architecture retrieves relevant memories, then uses those retrieved actions to determine an action. These retrieved memories are also used to form longer-term plans, and to create higher-level reflections, which are both entered into the memory stream for future use."
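The loop the researchers describe (perceive, store, retrieve, act, and write plans back into the stream) can be sketched in a few lines. This is a toy under stated assumptions: `GenerativeAgent` is a hypothetical class, the word-overlap retrieval is a placeholder rather than the paper's scoring, and the `llm` argument is any function standing in for a model call.

```python
from typing import Callable, List


class GenerativeAgent:
    """Toy perceive -> retrieve -> act loop (hypothetical class, not the paper's code)."""

    def __init__(self, name: str, llm: Callable[[str], str]):
        self.name = name
        self.llm = llm  # stand-in for a call to a large language model
        self.memory_stream: List[str] = []

    def perceive(self, observation: str) -> None:
        # Every perception is saved, relevant or not.
        self.memory_stream.append(observation)

    def retrieve(self, situation: str, k: int = 2) -> List[str]:
        # Placeholder relevance: number of shared words (an assumption; the
        # paper scores memories on recency, importance, and relevance).
        words = set(situation.lower().split())
        ranked = sorted(self.memory_stream,
                        key=lambda m: len(words & set(m.lower().split())),
                        reverse=True)
        return ranked[:k]

    def step(self, situation: str) -> str:
        memories = self.retrieve(situation)
        action = self.llm(f"{self.name} recalls: {memories}. {situation} What now?")
        # Retrieved memories also feed a longer-term plan, which is written
        # back into the memory stream for future use.
        plan = self.llm(f"Given {memories}, what is {self.name}'s plan for today?")
        self.memory_stream.append(f"[plan] {plan}")
        return action


agent = GenerativeAgent("Isabella Rodriguez", llm=lambda prompt: "keep preparing the party")
agent.perceive("The refrigerator is empty")
agent.perceive("Maria Lopez is studying for a Chemistry test while drinking coffee")
print(agent.step("Maria Lopez asks about the Valentine's Day party"))
```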

The memory stream is simply a timestamped list of observations, relevant or not, about the agent's current situation. For example:

- (1) Isabella Rodriguez is setting out the pastries

- (2) Maria Lopez is studying for a Chemistry test while drinking coffee

- (3) Isabella Rodriguez and Maria Lopez are conversing about planning a Valentine’s day party at Hobbs Cafe

- (4) The refrigerator is empty
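The memory stream itself can be as simple as an append-only list of timestamped records. The sketch below stores the four example observations; the `MemoryObject` and `MemoryStream` names, the one-minute spacing of the timestamps, and the `last_accessed` field (kept so a recency score can be computed later) are illustrative assumptions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List


@dataclass
class MemoryObject:
    description: str         # natural-language observation
    created: datetime        # when it was recorded
    last_accessed: datetime  # refreshed on retrieval; feeds a recency score


@dataclass
class MemoryStream:
    entries: List[MemoryObject] = field(default_factory=list)

    def add(self, description: str, when: datetime) -> MemoryObject:
        entry = MemoryObject(description, created=when, last_accessed=when)
        self.entries.append(entry)  # entries are kept in arrival order
        return entry


stream = MemoryStream()
start = datetime(2023, 2, 13, 8, 0)  # hypothetical timestamps, one minute apart
observations = [
    "Isabella Rodriguez is setting out the pastries",
    "Maria Lopez is studying for a Chemistry test while drinking coffee",
    "Isabella Rodriguez and Maria Lopez are conversing about planning a "
    "Valentine's day party at Hobbs Cafe",
    "The refrigerator is empty",
]
for minute, text in enumerate(observations):
    stream.add(text, start + timedelta(minutes=minute))
```

Nothing is filtered on the way in; deciding what matters is deferred to retrieval time.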

These memory stream entries get scored for recency, importance, and relevancy.
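The article doesn't spell out how the three scores combine. Here is a minimal sketch, assuming each score is min-max normalized across the candidate memories and the three are simply added. Recency decays exponentially with game hours since a memory was last accessed (the 0.995 decay factor is an assumed value), importance is a 1 to 10 rating a language model might assign, and relevance is cosine similarity between embedding vectors. The two-dimensional embeddings and ratings below are made up for illustration.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Memory:
    text: str
    hours_since_access: float  # game hours since the memory was last retrieved
    importance: float          # e.g. an LLM's 1-10 rating (assumed scale)
    embedding: List[float]     # vector from some text-embedding model (assumed)


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def min_max(values: List[float]) -> List[float]:
    # Rescale to [0, 1]; if all values are equal, the score carries no signal.
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def retrieve(memories: List[Memory], query: List[float], k: int = 2,
             decay: float = 0.995) -> List[Memory]:
    recency = min_max([decay ** m.hours_since_access for m in memories])
    importance = min_max([m.importance for m in memories])
    relevance = min_max([cosine(m.embedding, query) for m in memories])
    scores = [r + i + v for r, i, v in zip(recency, importance, relevance)]
    ranked = sorted(zip(scores, memories), key=lambda pair: pair[0], reverse=True)
    return [memory for _, memory in ranked[:k]]


memories = [
    Memory("Isabella Rodriguez is setting out the pastries", 1.0, 2, [1.0, 0.0]),
    Memory("Isabella Rodriguez and Maria Lopez are conversing about planning "
           "a Valentine's day party at Hobbs Cafe", 5.0, 8, [0.0, 1.0]),
    Memory("The refrigerator is empty", 0.5, 3, [0.7, 0.7]),
]
top = retrieve(memories, query=[0.1, 1.0])  # a query embedding "about the party"
for memory in top:
    print(memory.text)
```

Here the party conversation wins on importance and relevance despite being the least recent entry, while the empty refrigerator beats the pastries mainly on recency and relevance. Equal weights are the simplest choice; weighting the terms differently shifts the balance between what is fresh, what matters, and what is on topic.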

Reflections are a type of memory generated periodically, when the importance scores of an agent's recent observations add up past a certain threshold. They're produced by querying the large language model about the agent's recent experiences to determine what to consider; the responses are then used to probe the model further, with questions like "What topic is Klaus Mueller passionate about?" and "What is the relationship between Klaus Mueller and Maria Lopez?"
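A minimal sketch of that two-step process, assuming the threshold applies to the running sum of importance scores since the last reflection. `ReflectionTrigger`, `reflect`, the threshold of 10, the prompt wording, the sample observation, and the canned `fake_llm` are all hypothetical stand-ins so the example runs without a model.

```python
from typing import Callable, List


class ReflectionTrigger:
    """Fires once the summed importance of new observations passes a threshold."""

    def __init__(self, threshold: float):
        self.threshold = threshold
        self.accumulated = 0.0

    def observe(self, importance: float) -> bool:
        self.accumulated += importance
        if self.accumulated > self.threshold:
            self.accumulated = 0.0  # start counting again after reflecting
            return True
        return False


def reflect(recent: List[str], llm: Callable[[str], str]) -> List[str]:
    statements = "\n".join(recent)
    # Step 1: ask the model which questions the recent experiences raise.
    questions = llm("Statements:\n" + statements +
                    "\nWhat are the most salient high-level questions about them?")
    # Step 2: probe the model with each question; the answers become reflections.
    return [llm(q + "\nStatements:\n" + statements)
            for q in questions.splitlines() if q.strip()]


def fake_llm(prompt: str) -> str:
    # Canned responses standing in for a real model.
    if "salient" in prompt:
        return ("What topic is Klaus Mueller passionate about?\n"
                "What is the relationship between Klaus Mueller and Maria Lopez?")
    return "Answer to: " + prompt.splitlines()[0]


trigger = ReflectionTrigger(threshold=10)
fired = [trigger.observe(score) for score in [3, 4, 5, 2]]
reflections = reflect(["Klaus Mueller is reading about gentrification"], fake_llm)
```

Only the third observation tips the running total over the threshold, so a reflection would be generated once rather than after every event.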

The model then generates a response such as "Klaus Mueller is dedicated to his research on gentrification." Reflections like this shape future behavior and feed the planning module, which creates a daily plan for each agent that can be modified through interactions with other characters pursuing their own agendas.
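The planning side can be pictured as a schedule that an interaction rewrites from the current time onward. This is a deliberately simplified, hypothetical `DailyPlan`: rather than asking a language model to rewrite the rest of the day, it just truncates the schedule and appends the new activity. The activities and times are invented for illustration.

```python
from dataclasses import dataclass, field
from datetime import time
from typing import List, Tuple


@dataclass
class DailyPlan:
    items: List[Tuple[time, str]] = field(default_factory=list)

    def add(self, start: time, activity: str) -> None:
        self.items.append((start, activity))
        self.items.sort(key=lambda item: item[0])  # keep the day in order

    def current(self, now: time) -> str:
        # The most recent activity that has already started, if any.
        started = [activity for start, activity in self.items if start <= now]
        return started[-1] if started else "idle"

    def revise(self, now: time, activity: str) -> None:
        # An interaction at `now` drops everything scheduled from `now` onward
        # and replaces it with the new activity.
        self.items = [item for item in self.items if item[0] < now]
        self.add(now, activity)


plan = DailyPlan()
plan.add(time(8, 0), "work on gentrification research")
plan.add(time(12, 0), "have lunch at Hobbs Cafe")
plan.add(time(13, 0), "continue research at the library")
before = plan.current(time(12, 30))
plan.revise(time(12, 30), "talk with Maria Lopez about the Valentine's Day party")
after = plan.current(time(14, 0))
```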

This won't end well

What's more, the agents successfully communicated with one another, resulting in what the researchers describe as emergent behavior.

"During the two-day simulation, the agents who knew about Sam’s mayoral candidacy increased from one (4 percent) to eight (32 percent), and the agents who knew about Isabella’s party increased from one (4 percent) to twelve (48 percent), completely without user intervention," the paper says. "None who claimed to know about the information had hallucinated it."
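The percentages follow directly from the town's population of 25 agents, so each agent represents 4 percent of Smallville. A quick check:

```python
POPULATION = 25  # agents in Smallville


def share(count: int, population: int = POPULATION) -> float:
    """Percentage of the population that knows a piece of information."""
    return 100 * count / population


for label, before, after in [("Sam's candidacy", 1, 8), ("Isabella's party", 1, 12)]:
    print(f"{label}: {share(before):.0f}% -> {share(after):.0f}%")
# prints:
# Sam's candidacy: 4% -> 32%
# Isabella's party: 4% -> 48%
```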

There were some hallucinations, though. The agent Isabella knew about the agent Sam's announcement that he was running for mayor even though the two never had that conversation. And the agent Yuriko "described her neighbor, Adam Smith, as a neighbor economist who authored Wealth of Nations, a book authored by an 18th-century economist of the same name."

However, things mostly went well in the simulated town of Smallville. Five of the twelve guests invited to the party at Hobbs Cafe showed up. Three did not attend, citing scheduling conflicts, and the remaining four had expressed interest but did not show up. Pretty close to real life, then.

The researchers say that their generative agent architecture produced the most believable behavior, as assessed by human evaluators, compared with versions of the architecture that disabled one or more of reflection, planning, and memory.

Limitations

At the same time, they conceded their approach is not without some rough spots.

Behavior became more unpredictable over time as the memory stream grew, to the point that retrieving the most relevant memories became problematic. Agents also behaved erratically when the natural language used for memories and interactions failed to convey salient social information.

"For instance, the college dorm has a bathroom that can only be occupied by one person despite its name, but some agents assumed that the bathroom is for more than one person because dorm bathrooms tend to support more than one person concurrently and choose to enter it when there is another person inside," the authors explained.

Similarly, generative agents didn't always recognize that they could not enter stores after closing time at 1700 local time, which is clearly an error. Such issues, the boffins say, can be addressed with more explicit descriptions, such as describing the dorm bathroom as a "one-person bathroom" instead of a "dorm bathroom," and adding normative operating hours to store descriptions.
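One way to apply that advice is to generate location descriptions from structured facts, so the norms are always spelled out in the text the agents read. The `Location` class, its fields, and the 0900 opening hour are hypothetical; only the 1700 closing time and the one-person bathroom come from the examples above. The fix remains a matter of wording fed to the model, not a hard rule enforced by code.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Location:
    name: str
    capacity: Optional[int] = None           # None means no stated limit
    hours: Optional[Tuple[int, int]] = None  # (open_hour, close_hour), 24-hour clock

    def describe(self) -> str:
        # Turn structured facts into an explicit natural-language description.
        text = self.name
        if self.capacity == 1:
            text = f"one-person {text} (do not enter while someone is inside)"
        elif self.capacity is not None:
            text += f" (holds up to {self.capacity} people)"
        if self.hours is not None:
            open_h, close_h = self.hours
            text += f" (open {open_h:02d}00 to {close_h:02d}00; closed outside these hours)"
        return text


bathroom = Location("bathroom", capacity=1)
store = Location("store", hours=(9, 17))
print(bathroom.describe())
print(store.describe())
```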

The researchers also note that their approach was expensive – costing thousands of dollars in ChatGPT tokens to simulate two days – and that further work needs to be done to address bias, inadequate model data, and safety.

Generative agents, they observe, "may be vulnerable to prompt hacking, memory hacking – where a carefully crafted conversation could convince an agent of the existence of a past event that never occurred – and hallucination, among other things."

Well, at least they're not driving several tons of steel at high speed on public roads. ®

April 11, 2023 at 02:24PM

What if someone mixed The Sims with ChatGPT bots? It would look like this - The Register